NVIDIA GPU Test Drive

Try NVIDIA GPUs with Your Application at Microway

Access a remote testing environment for AI Cluster Software (NVIDIA and beyond), execute remote testing on a private NVIDIA GPU Server, or access a full cluster software suite. Perform a GPU test drive with Microway.

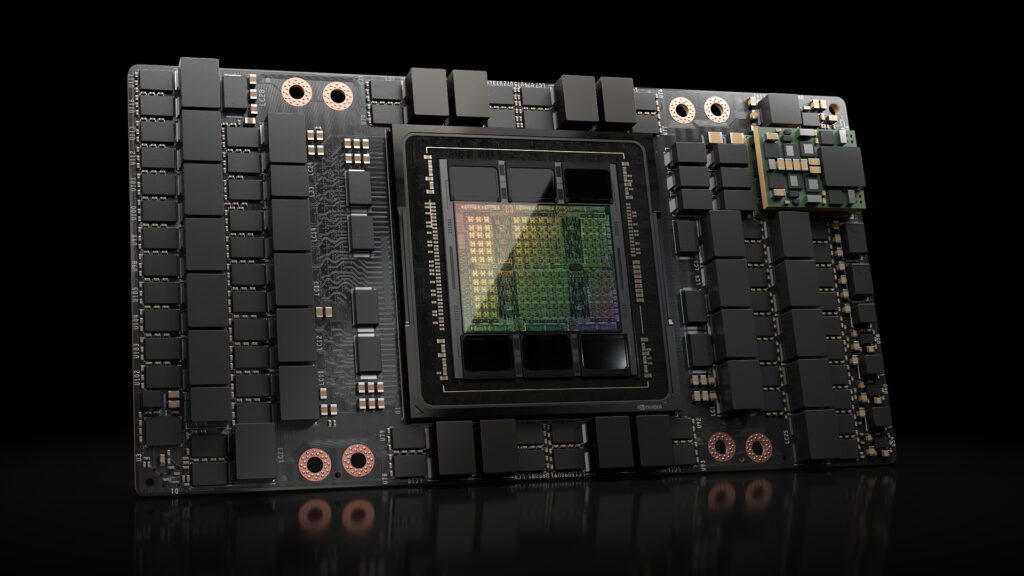

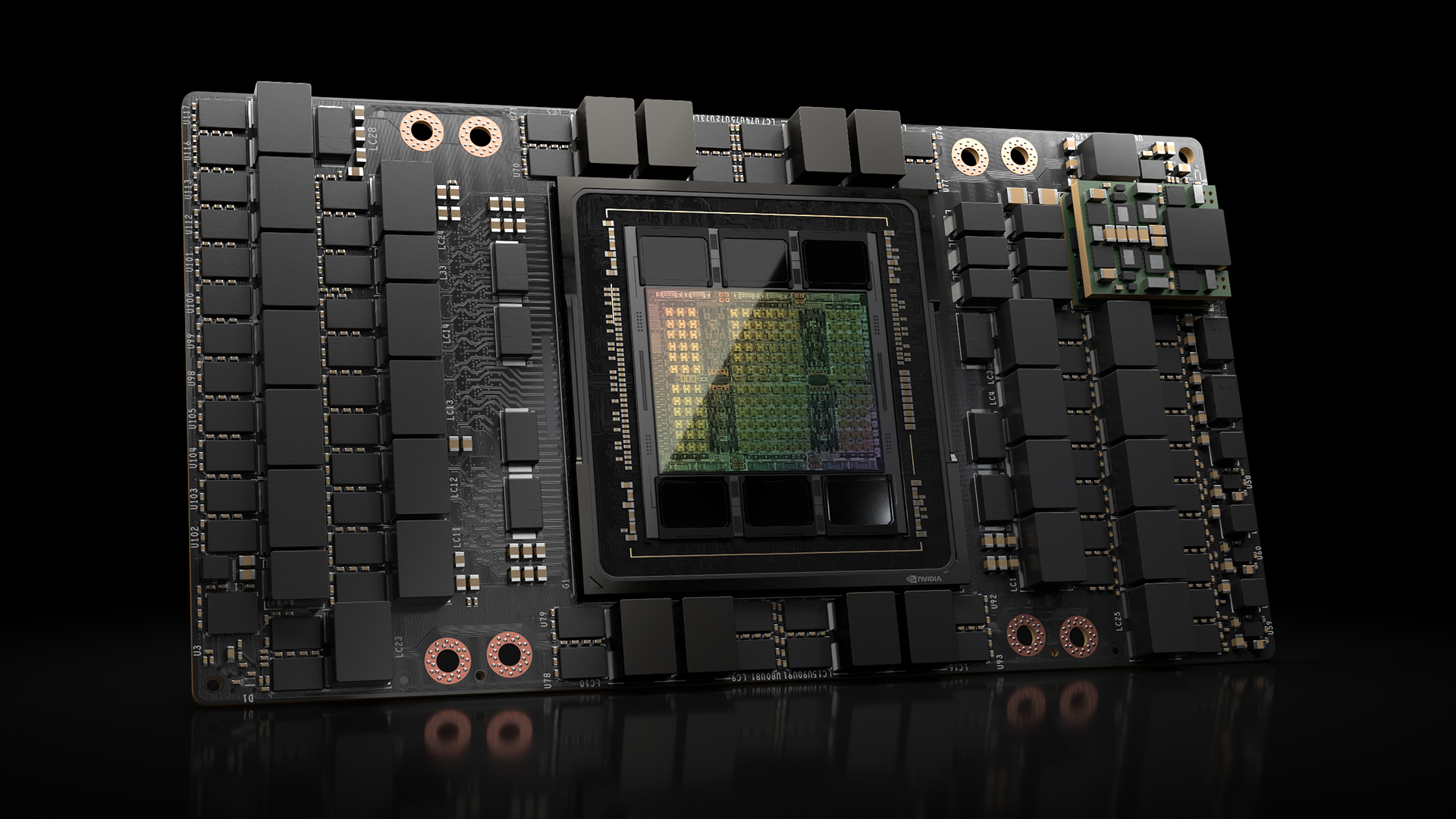

Available GPUs and System Types

Regardless of instance type, Microway offers a variety of GPU types and server models.

- NVIDIA H200, NVIDIA H200 NVL PCI-E, NVIDIA RTX PRO 6000 Blackwell, NVIDIA RTX 6000 Ada, NVIDIA L40S

- NVIDIA DGX Systems (NVIDIA DGX H200 and more)

- Fully configurable systems with NVIDIA PCI-E Datacenter GPUs (1-8) or NVIDIA Datacenter GPUs with NVIDIA NVLink

- Storage and data planes to pair with NVIDIA GPU clusters

AI Cluster Software Environments

NVIDIA AI Enterprise

An NVIDIA NGC-enabled environment with frameworks pre-installed and ready to use with NVIDIA AI Enterprise, which simplifies end-to-end AI workflows.

NVIDIA RAG Blueprint

An environment with a Retrieval-Augmented Generation (RAG) workflow pre-installed and ready for customization.

LLM Router

Route each request to the LLM best suited to it, whether the priority is response time, reasoning depth, or computational price/performance. Replicate the model choices you would make when designing a production environment.
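As a rough illustration of the routing idea, the sketch below picks a model according to a stated priority. It is not the NVIDIA LLM Router implementation: the model names, the `ModelProfile` fields, the `route` function, and all numeric values are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical description of one candidate model."""
    name: str                  # placeholder label, not a real model
    latency_ms: float          # assumed typical response time
    reasoning_score: float     # assumed 0-1 rating of reasoning depth
    cost_per_1k_tokens: float  # assumed price, arbitrary units


# Hypothetical catalog; every value here is a placeholder.
CATALOG = [
    ModelProfile("small-fast", 120.0, 0.40, 0.20),
    ModelProfile("mid-balanced", 400.0, 0.70, 0.25),
    ModelProfile("large-reasoning", 1500.0, 0.95, 2.00),
]


def route(priority: str, catalog=CATALOG) -> ModelProfile:
    """Return the model that best matches the requested priority."""
    if priority == "latency":
        # Lowest response time wins.
        return min(catalog, key=lambda m: m.latency_ms)
    if priority == "reasoning":
        # Highest reasoning rating wins.
        return max(catalog, key=lambda m: m.reasoning_score)
    if priority == "price_performance":
        # Most reasoning capability per unit of cost wins.
        return max(catalog, key=lambda m: m.reasoning_score / m.cost_per_1k_tokens)
    raise ValueError(f"unknown priority: {priority!r}")


if __name__ == "__main__":
    for priority in ("latency", "reasoning", "price_performance"):
        print(priority, "->", route(priority).name)
```

In a test drive, the placeholder figures would be replaced by measurements taken on the hardware under evaluation, so that the routing decisions reflect the models and GPUs you actually plan to deploy.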

Something Else?

Looking for an AI or HPC instance with special software? Don’t see a fit among these options? We take custom requests.

- Most NVIDIA NGC containers

- Many GPU options

Private Testing Servers

Private GPU Server Access

Request exclusive access to an NVIDIA GPU server for remote benchmarking.

We will do our best to accommodate your requested GPU and system type.

Private Access to DGX H200

Request exclusive access to an NVIDIA DGX H200 for remote benchmarking or evaluating the NVIDIA DGX software stack.

Workstations or Workstation GPUs

Request exclusive access to a workstation or NVIDIA workstation GPUs.

We will do our best to accommodate your requested GPU and system type.

Something Else?

Don’t see a fit among these private testing options? We take custom requests.

- Many GPU options

- Host system configurations

Shared Cluster Environments

SLURM GPU Cluster

If you’re comfortable with a SLURM scheduler and a shared system, or want to see what Microway’s default cluster software load-out can do, we have instances ready for rapid login.

Kubernetes GPU Instance

Try a generic Kubernetes-based cluster.

NVIDIA Mission Control

Try an NVIDIA Mission Control environment with Base Command Manager and Run:ai. End-to-end AI cluster management and workload orchestration with a full NVIDIA stack.

Something Else?

Want to try the workflow and usability of a different cluster stack? Our team can help with a custom request.

- Singularity Containers

- Open OnDemand