As one of NVIDIA’s Elite partners, we see a lot of GPU deployments in higher education. GPUs have been proving themselves in HPC for over a decade, and they are the de facto standard for deep learning research. They’re also becoming essential for other types of machine learning and data science. But GPUs are not always available to students, particularly undergraduates.

GPU-accelerated Classrooms at MSOE

One deployment I’m particularly proud of runs at the Milwaukee School of Engineering, where it is used for undergraduate education as well as for faculty and industry research. This cluster combines NVIDIA’s Volta-generation DGX systems with NVIDIA Tesla T4 GPUs, Mellanox Ethernet, and NetApp storage.

Rather than having to learn a more arcane supercomputer interface, students are able to start GPU-accelerated Jupyter sessions with the click of a button in their web browser.

The cluster is connected to NVIDIA’s NGC hub, providing pre-built containers with the latest HPC & AI software stacks. The DGX systems do the heavy lifting and the Tesla T4 systems service less demanding needs (such as student sessions during class).

Microway’s team delivered all of this fully integrated and ready-to-run, allowing MSOE’s undergrads to get their hands on the latest, highest-performing hardware and software tools. And they don’t have to dive into huge levels of complexity until they’re ready.

Multi-Instance GPU amplifies Remote Learning

What changed this month is that NVIDIA’s new DGX A100 simplifies your infrastructure. Institutions won’t need one set of systems for the most demanding work and a separate set for less intensive classes/labs. Instead, DGX A100 wraps all these capabilities into one powerful and configurable HPC/AI system. It can handle anything from training a huge neural network to serving a classroom of 56 students, or a combination of the two.

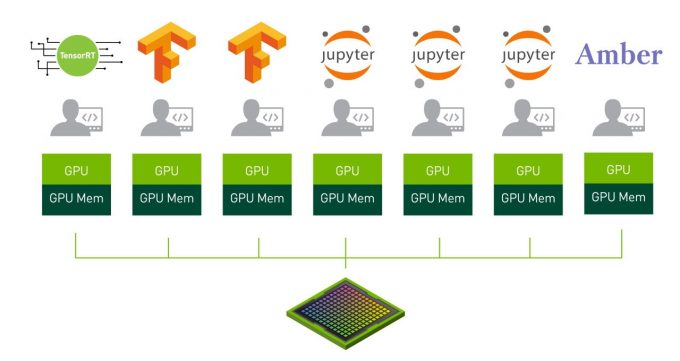

NVIDIA calls this capability Multi-Instance GPU (MIG). The details might sound a bit hairy, but think of MIG as providing the same kinds of flexibility that virtualization has been providing for years. You can use the whole GPU, or divide it up to support several different applications/users.

DGX A100 is currently the only system providing this capability, and it offers anywhere from 8 to 56 GPU instances (other NVIDIA A100 GPU systems will be shipping later this year).

The accompanying diagram depicts seven students/users each running their own GPU-accelerated workload on a single NVIDIA A100 GPU. Each of the eight GPUs in the DGX A100 supports up to seven GPU instances, for a total of 56 instances.
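
To make the 8-to-56 range concrete, here is a minimal Python sketch of the arithmetic. It is illustrative only: the function and constant names are hypothetical and do not correspond to any NVIDIA API, and it assumes that a GPU left undivided counts as a single instance.

```python
from typing import Sequence

# Figures taken from the text above; names are hypothetical, not an NVIDIA API.
GPUS_PER_DGX_A100 = 8        # eight A100 GPUs per DGX A100
MAX_INSTANCES_PER_GPU = 7    # each A100 supports up to seven GPU instances


def total_gpu_instances(instances_per_gpu: Sequence[int]) -> int:
    """Sum the GPU instances across all GPUs in one DGX A100.

    Assumption: an undivided GPU is counted as one instance.
    """
    if len(instances_per_gpu) != GPUS_PER_DGX_A100:
        raise ValueError("expected one entry per GPU")
    for count in instances_per_gpu:
        if not 1 <= count <= MAX_INSTANCES_PER_GPU:
            raise ValueError("each GPU provides between 1 and 7 instances")
    return sum(instances_per_gpu)


# Every GPU left whole, e.g. for large training jobs.
whole_gpus = total_gpu_instances([1] * 8)

# Every GPU split seven ways, e.g. for a full classroom.
full_classroom = total_gpu_instances([7] * 8)

# Half the GPUs left whole and half split seven ways.
mixed = total_gpu_instances([1] * 4 + [7] * 4)

print(whole_gpus, full_classroom, mixed)
```

The mixed case corresponds to the "combination of the two" scenario: some GPUs stay whole for demanding work while the rest are divided among students.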

Consider how these new capabilities might enable your institution, for example by offering GPU-accelerated sessions to each student in a remote learning course. The traditional classroom of lab PCs might be replaced by a single DGX system.

Each DGX A100 system can serve 56 separate Jupyter notebooks, each with GPU performance similar to a Tesla T4. Microway deploys these systems with a workload manager that supports resource sharing between classroom requests and other types of work, so the full horsepower of the DGX can be leveraged for faculty research when class is not in session. Further, your IT team no longer needs to support dozens of physical workstations: the compute resources are centralized and can be managed from a single location.

Flexible Platforms Support Diverse Workloads

These types of high-performance computer labs are likely familiar to curriculums in traditionally compute-demanding fields (e.g., computer science, engineering, computational chemistry). However, we hear increasing calls for these computational resources from other departments across campuses. As the power of data analytics and machine learning is put to use in other fields, this type of deployment might even be an opportunity for cost-sharing between traditionally disconnected departments.

This year, we’re all being challenged to conceive of new, seamless methods for remote access, collaboration, and instruction. Our team would be thrilled to be a part of the transformation at your institution. The first DGX A100 units in academia will be at the University of Florida next month, where Microway will be performing the integration. I know NVIDIA’s DGX A100 systems will prove invaluable to current GPU users, and I hope they will also extend into the hands of graduate and even undergraduate students. Let’s talk about what’s possible now.