Over the past decade, and particularly over the past several years, deep learning applications have been developed for a wide range of scientific and engineering problems. For example, deep learning methods have been shown to increase the statistical significance of Higgs Boson detection in LHC data, and similar analyses are being used to explore possible decay modes of the Higgs. Deep learning methods fall under the larger category of machine learning, which also includes methods such as Support Vector Machines (SVMs), kernel methods, Hidden Markov Models (HMMs), Bayesian methods, and regression techniques, among others.

Deep learning is a methodology that involves computation using an artificial neural network (ANN) with many layers. Training deep networks was not always within practical reach, however. The main difficulty arose from the vanishing/exploding gradient problem; see a previous blog post on Theano and Keras for a discussion of this. Training deep networks has become feasible with the development of GPU parallel computation, better error minimization algorithms, careful network weight initialization, regularization and dropout methods, and the use of Rectified Linear Units (ReLUs) as artificial neuron activation functions. Because the derivative of a ReLU is 1 for any positive input, the backpropagated error signal is not repeatedly attenuated as it passes through active units, as it is with sigmoid activations.
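To see why the activation function matters, consider the error signal as it is backpropagated through a stack of layers. The sketch below is deliberately simplified (an assumption for illustration): all weights are taken as 1 and every layer sees the same pre-activation, so the signal reaching the first layer is just the product of the per-layer activation derivatives. The sigmoid derivative never exceeds 0.25, so that product shrinks geometrically with depth, while the ReLU derivative is exactly 1 for positive inputs.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x: float) -> float:
    # Derivative of the logistic sigmoid; its maximum is 0.25, reached at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)

def relu_grad(x: float) -> float:
    # Derivative of max(0, x): 1 for positive inputs, 0 otherwise.
    return 1.0 if x > 0 else 0.0

def backprop_signal(depth: int, grad_fn, pre_activation: float = 0.5) -> float:
    """Product of activation derivatives across `depth` layers.

    Simplification: weights are 1 and every layer sees the same
    pre-activation, so only the activation function's effect is shown.
    """
    signal = 1.0
    for _ in range(depth):
        signal *= grad_fn(pre_activation)
    return signal

for depth in (2, 10, 20):
    print(depth, backprop_signal(depth, sigmoid_grad), backprop_signal(depth, relu_grad))
```

With these settings the sigmoid column drops below 1e-6 by a depth of 10, while the ReLU column stays at 1.0. In a real network the weight matrices also scale the signal, which is why careful weight initialization matters as well.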

Application of Trained Deep Networks

Trained deep networks are now being applied to a wide range of problems. Some of these problems could alternatively be solved using a numerical model built from discretized differential equations. For instance, deep learning is being applied to the protein folding problem, which could instead be modeled as a physical system, using equations of motion (for very small proteins) or energy-based minimization methods (for larger systems). As an alternative approach, a deep network can be trained on correctly folded tertiary protein structures, using the primary and secondary structure as input data. The trained network can then predict a protein’s tertiary structure [Lena, P.D., et al.].

Neural networks offer an alternative, data-based method to solve problems which were previously approached using physical numerical models, or other machine learning methods. A distinction between data-based models and physical models is that data-based models can be applied to problems for which no well-accepted, or practical, predictive theoretical framework exists.

Deep Neural Networks as Biological Analogs

Aside from providing an alternative data-based approach to problems for which no discrete physical model may exist, deep learning applications can reproduce some of the functions of their biological counterparts, such as vision or hearing.

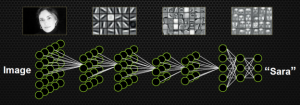

In both biological and artificial visual networks, the lowest layers detect the most basic features, such as edges. In most convolutional network architectures, convolutional layers are separated by pooling layers, which add some robustness to feature detection, so that a feature that is translated or rotated slightly will still be detected. Successive convolution/feature layers build on these edges to form features made of multiple edges, or curves. The highest convolutional layers combine features from the previous layers into the most complex feature detectors: through training pressure, their weights become set to detect complex shapes, such as faces, chairs, tires, houses, doors, etc. The layers in a deep visual classification network thus separate out image features from lowest to highest complexity. If a network does not have sufficient depth, this separation will be poor, and the classifications will be blurred and unfocused.
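The convolution, activation, and pooling sequence described above can be sketched on a single row of pixels, a one-dimensional stand-in for an image. The edge filter here is hand-set and hypothetical; a trained CNN learns its filter weights. The point of the sketch is that max pooling reports whether an edge occurred anywhere inside each pooling window, so a small shift leaves the pooled output unchanged.

```python
def convolve1d(signal, kernel):
    """'Valid' (unpadded) 1-D cross-correlation, as computed by CNN layers."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def relu(values):
    return [max(0.0, v) for v in values]

def max_pool1d(values, size):
    """Non-overlapping max pooling: window and stride are both `size`."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]

# Hypothetical hand-set filter that responds to a dark-to-bright step.
EDGE_KERNEL = [-1.0, 1.0]

def edge_features(row, pool_size=4):
    """Convolve, rectify, then pool: one feature value per pooling window."""
    return max_pool1d(relu(convolve1d(row, EDGE_KERNEL)), pool_size)

print(edge_features([0, 0, 1, 1, 1, 1, 1, 1, 1]))  # edge inside the first window
print(edge_features([0, 0, 0, 1, 1, 1, 1, 1, 1]))  # shifted one pixel, same output
print(edge_features([0, 0, 0, 0, 0, 1, 1, 1, 1]))  # edge now in the second window
```

The first two rows both give `[1.0, 0.0]`, while the third gives `[0.0, 1.0]`: the robustness only extends to shifts that stay within a pooling window, which is one reason networks stack several convolution/pooling stages.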

Deep Learning Applications in Science and Engineering

Despite the advances of the past decade, deep learning cannot presently be applied to just any research problem. Some problems have not yet been expressed in an information framework that is compatible with deep learning, and for others no deep network architecture yet exists that can perform the kinds of functions needed. Deep learning has nevertheless shown surprising progress. For example, a recent advance surpassed nearly everyone’s expectations when Google DeepMind’s “AlphaGo” AI player defeated Lee Sedol, one of the world’s top Go players, in March 2016. This milestone had widely been thought to be years, if not decades, away.

The following sections are not meant to be a complete description of deep learning applications, but rather to demonstrate the wide range of scientific research problems to which deep learning can be applied. Recent major developments in algorithms, methods, and parallel computation with GPUs have created the conditions for the recent succession of major advances in the field of Artificial Intelligence.

Deep Learning in Image Classification

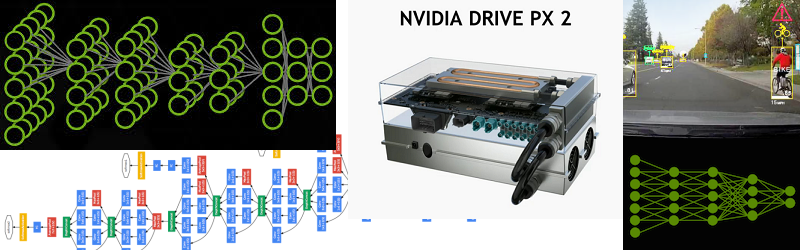

Image classification typically uses a particular type of deep neural network, called a convolutional neural network (CNN). Figure 1 illustrates the basic organization of a CNN for visual classification; an actual network for this sort of task would have many more neurons per layer.

An image can be scaled down to some degree without losing the features essential for detection. If the features in an image are too large or too small for the filter sizes, however, they will not be detected. This subtle point presents a problem for visual recognition: for a given trained network, images must be scaled so that their feature sizes match those of the highest-layer convolutional filters. Recent approaches have addressed this problem by having the network construct filters for the same feature at several different size scales.

The pooling layers impart some robustness to feature detection, allowing for small feature rotations and translations. However, if a face is flipped upside down, a network like the one in Figure 1 will not correctly identify it. This is a methodological difficulty: to address it, the network must develop feature detectors for the same feature at different rotations, which can be done by including rotated copies of the images in the training set. A similar problem arises if a face is rotated out of the image plane rather than within it. This distorts key facial features, which would once again foil a network trained only on forward-facing faces. These problems of rotational and scale invariance are active areas of research in object recognition.
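Two remedies mentioned above, adding rotated copies of training images and presenting an image at several scales, can be sketched on a plain 2-D grid of pixel values. This is a minimal, library-free sketch: it only handles 90-degree rotations (arbitrary angles need interpolation, which a real image library would provide), it uses 2×2 averaging as one simple choice of downsampling, and it does nothing for out-of-plane rotation. The function names are illustrative, not from any particular framework.

```python
from typing import List

Image = List[List[float]]

def rotate90(img: Image) -> Image:
    """Rotate a 2-D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def rotations(img: Image) -> List[Image]:
    """The original image plus its 90, 180 and 270 degree rotations."""
    out = [img]
    for _ in range(3):
        out.append(rotate90(out[-1]))
    return out

def augment(dataset):
    """Expand (image, label) pairs with rotated copies that keep the same label."""
    return [(rot, label) for img, label in dataset for rot in rotations(img)]

def downsample2x(img: Image) -> Image:
    """Halve each dimension by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = len(img) // 2 * 2, len(img[0]) // 2 * 2
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
             for c in range(0, w, 2)]
            for r in range(0, h, 2)]

def pyramid(img: Image, levels: int) -> List[Image]:
    """Successively halved copies of an image, for matching features at several scales."""
    out = [img]
    for _ in range(levels - 1):
        out.append(downsample2x(out[-1]))
    return out

face = [[1, 2], [3, 4]]
print(rotations(face)[2])   # the 180-degree ("upside down") copy
print(downsample2x(face))   # a single averaged pixel
```

Augmentation multiplies the training set (four copies per image here), which is the usual price for teaching a network detectors at several orientations.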

Looking at the network in Figure 1, the three output neurons indicate, in coded form, the name of the person whose face is presented to the network. The grayscale values in the tiled images indicate connection weight values. Each square tile represents a different convolutional filter, which is formed under training pressure to extract certain features. The convolutional filters in the lowest layers pick out edges. Higher layers detect more complex features, which could consist of combinations of edges forming a nose or a chin, for example.

In one research development, de-noising autoencoders were used to remove fog from images taken live from autonomous land vehicles. A similar solution was used for enhancing low-light images [Lore, K., et al.].
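Much of the idea behind the de-noising autoencoders mentioned above lies in how the training data are built: the network receives a corrupted image as input and is scored on how closely its output matches the clean original. The sketch below shows only that data-preparation step and the reconstruction error, under stated assumptions: additive Gaussian noise is the corruption (fog or low-light darkening would be modeled differently), pixel values lie in [0, 1], and the autoencoder itself is left out.

```python
import random

def corrupt(image, noise_std, rng):
    """Add Gaussian noise to every pixel and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, noise_std))) for p in row]
            for row in image]

def denoising_pairs(clean_images, noise_std=0.1, seed=0):
    """Build (noisy input, clean target) training pairs for a denoising autoencoder."""
    rng = random.Random(seed)  # seeded so the corrupted dataset is reproducible
    return [(corrupt(img, noise_std, rng), img) for img in clean_images]

def mse(a, b):
    """Mean squared error between two equally sized images (the usual training loss)."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)

clean = [[0.2, 0.8], [0.5, 0.5]]
noisy, target = denoising_pairs([clean], noise_std=0.1, seed=1)[0]
print(mse(noisy, target))  # the error a perfect denoiser would drive to zero
```

During training, an autoencoder would be optimized to minimize `mse(model(noisy), target)`, so it learns to undo the corruption rather than merely copy its input.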

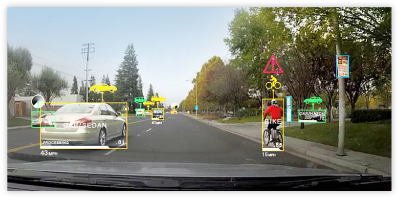

Recent major advances in machine vision research include object detection and image content tagging (for example, with Region-based Convolutional Neural Networks, or R-CNNs), along with the development of more robust recognition of objects in the presence of noise, applied rotation, size variation, etc. Scene recognition deep networks are currently being used in self-driving cars (Figure 2).

Deep Learning in Natural Language Processing

Significant progress has also been made in Natural Language Processing (NLP). Using word and sentence vector representations along with syntactic tree parsing, NLP ANNs have been able to identify complex variations in written language, such as negation, where a seemingly positive sentence takes on a negative meaning [Socher, R., et al.]. The meaning of larger bodies of text, such as articles or book chapters, can be resolved into a group of vectors summarizing that text.
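The tree-structured composition behind this kind of model can be sketched in a few lines. This is a minimal sketch of the plain recursive neural network form, p = tanh(W [c1; c2] + b), in which each parent phrase vector is computed from its two child vectors following the parse tree; the cited paper uses a more expressive tensor-based composition, which is not reproduced here. The word vectors, weights, and vector size are random, hypothetical values, so the output carries no real meaning; a trained model would learn them.

```python
import math
import random

DIM = 4  # hypothetical vector size; real models use far larger vectors

def make_params(seed=0):
    """Random, untrained composition weights W (DIM x 2*DIM) and bias b (DIM)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * DIM)] for _ in range(DIM)]
    b = [0.0] * DIM
    return W, b

def compose(left, right, W, b):
    """Parent vector p = tanh(W [left; right] + b) from two child vectors."""
    x = left + right  # list concatenation stacks the two children
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]

def encode(tree, embeddings, W, b):
    """Encode a binary parse tree, given as nested 2-tuples of words, bottom-up."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(encode(left, embeddings, W, b),
                   encode(right, embeddings, W, b), W, b)

# Usage with made-up word vectors for the phrase "not (very good)".
rng = random.Random(1)
embeddings = {w: [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
              for w in ("not", "very", "good")}
W, b = make_params()
phrase_vector = encode(("not", ("very", "good")), embeddings, W, b)
```

Because the same `W` is reused at every node, phrases of any length map to a vector of the same size, and the parse tree decides which words are combined first; this is how a word like "not" can act on an already-composed "very good".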

In a different NLP application, a collection of IMDB movie reviews was used to train a deep network to evaluate the sentiment of movie reviews; an approach for this was examined using Keras in a previous Microway Tech Tips blog post. Primary applications of NLP deep networks include language translation and sentiment analysis.

Transcription of video to text in the absence of audio is an active area of research that involves both image classification and NLP. Deep networks for NLP are usually recurrent neural networks (RNNs), in which the network’s hidden state is fed back as an input at the next step. For NLP tasks, the previous context partly determines the best vector for representing the next sentence or word. For a review of RNNs, see, for example, The Unreasonable Effectiveness of Recurrent Neural Networks, by Andrej Karpathy.
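The feedback loop that makes a network recurrent fits in a single line: the new state depends on the current input and on the previous state, h_t = tanh(w_x·x_t + w_h·h_(t−1) + b). The sketch below uses a scalar input and state for readability (real RNNs use vectors and weight matrices), and the weight values are arbitrary, hypothetical choices.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a simple (Elman-style) recurrent cell with scalar state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Feed a sequence through the cell, returning the state after every step."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

print(run_rnn([1.0, 0.0, 0.0])[-1])  # input seen two steps ago: state has faded
print(run_rnn([0.0, 0.0, 1.0])[-1])  # same input seen just now: tanh(1.0)
```

The two sequences contain the same values in a different order and end in different states, so the context really does shape the representation. The first result is also much smaller than the second: with a recurrent weight below 1, information from earlier steps fades quickly, which is the weakness that gated units address.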

Language Translation

Encoder-decoder frameworks have been developed that encode an English sentence, for example, into a reduced vector representation, and then decode that representation into French using a French decoder [Cho, K., et al.]. The encoding/decoding can be done between any two languages. The reduced representation can be thought of as a universal encoding, which encodes the input into a distributed pattern in the network. This sort of distributed encoded pattern has been referred to as a “thought”, or an internal encoded representation of data.
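The encoder-decoder structure can be sketched with two small recurrent loops: the encoder reads a variable-length token sequence and compresses it into one fixed-size vector (the “thought”), and the decoder unrolls from that vector to emit target tokens. This toy uses random, untrained weights and hypothetical vocabulary sizes, so its output is meaningless; it also simplifies the decoder, which here does not feed its own previous output back in, as real translation decoders do.

```python
import math
import random

HIDDEN = 3     # size of the fixed-length "thought" vector (hypothetical)
SRC_VOCAB = 5  # toy source vocabulary size (hypothetical)
TGT_VOCAB = 4  # toy target vocabulary size (hypothetical)

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Untrained weights; a real system learns these from parallel sentence pairs.
EMBED = rand_matrix(SRC_VOCAB, HIDDEN)  # one row per source token
W_ENC = rand_matrix(HIDDEN, HIDDEN)
W_DEC = rand_matrix(HIDDEN, HIDDEN)
W_OUT = rand_matrix(TGT_VOCAB, HIDDEN)

def encode(tokens):
    """Compress a token sequence of any length into one HIDDEN-sized vector."""
    h = [0.0] * HIDDEN
    for t in tokens:
        h = [math.tanh(e + r) for e, r in zip(EMBED[t], matvec(W_ENC, h))]
    return h

def decode(context, max_len=4):
    """Greedily emit target token ids, starting from the encoder's context vector."""
    h, out = context, []
    for _ in range(max_len):
        h = [math.tanh(v) for v in matvec(W_DEC, h)]
        scores = matvec(W_OUT, h)
        out.append(max(range(TGT_VOCAB), key=scores.__getitem__))
    return out

print(decode(encode([1, 2, 3])))
```

Whatever the input length, `encode` returns a vector of size `HIDDEN`; everything the decoder knows about the source sentence has to pass through that vector.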

Automatic Speech Recognition

Automatic Speech Recognition (ASR) is another research area where deep networks are producing better results than previously used methods, including Hidden Markov Models (HMMs). Previously, ASR used HMMs combined with ANNs in a hybrid method. Because deep networks for ASR can now be trained within practical timescales, deep learning is now producing the best results. Long Short-Term Memory (LSTM) [Song, W., et al. & Sak, H., et al.] and Gated Recurrent Units (GRUs) play an important role in improving ASR deep networks, by helping them retain information from many time steps earlier. The TIMIT speech dataset is a widely used source of training and benchmark data for ASR deep networks.
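How a gated unit retains information can be seen in a scalar sketch of a GRU. The update gate z decides how much of the previous state is overwritten at each step; when z stays near 0, the state is copied forward almost unchanged. Papers and libraries differ on whether z or 1 − z multiplies the previous state; this sketch uses h_t = (1 − z)·h_(t−1) + z·candidate. The parameter values are hand-picked and hypothetical, chosen so the gate opens only for a strong input. An LSTM achieves a similar effect with a separate memory cell and is not shown here.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU: h_t = (1 - z) * h_prev + z * candidate."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    candidate = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    return (1.0 - z) * h_prev + z * candidate

def run_gru(sequence, p, h=0.0):
    for x in sequence:
        h = gru_step(x, h, p)
    return h

# Hypothetical parameters: the update gate opens for x = 1 and stays
# nearly closed for x = 0, so an event is written once and then kept.
PARAMS = {"wz": 10.0, "uz": 0.0, "bz": -5.0,
          "wr": 0.0, "ur": 0.0, "br": 0.0,
          "wh": 2.0, "uh": 0.0, "bh": 0.0}

# One "event" followed by 20 empty steps.
remembered = run_gru([1.0] + [0.0] * 20, PARAMS)
print(remembered)
```

After 20 empty steps the state still holds most of the value written by the single event (above 0.8 with these parameters), whereas a plain tanh recurrence with a recurrent weight below 1 would shrink it at every step. In a trained GRU the gate parameters are learned, so the network itself decides what to keep.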

Deep Learning in Scientific Experiment Design

Using a deep learning approach, machines can now provide direction on the design of scientific experiments. Consider, for example, a recent approach taken by materials scientists, in which new NiTi-based shape memory alloys were explored for lower thermal hysteresis [Xue, D., et al.]. Starting from a dataset of 22 known NiTi-based alloys, a deep network was trained to reproduce their 22 measured thermal hysteresis values, using particular physical properties of the alloys as input parameters.

Once trained on the known alloys, the network was used to predict the thermal hysteresis of a large number of theoretical alloys. The four alloys with the lowest predicted values were selected, synthesized, and their thermal hysteresis was measured. The data for these four new alloys were then added to the training set, and the network was re-trained to improve its accuracy. After the experiment proceeded through repeated iterations of four unexplored alloys each, a remarkable result was reached: 14 of the 36 new alloys had a smaller thermal hysteresis than any of the 22 known alloys in the original data set.
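The fit, predict, select, measure, re-fit loop described above can be sketched as follows. This is a toy under loudly stated assumptions, not the cited study’s method: each candidate is described by a single hypothetical composition feature, a least-squares quadratic fit stands in for the trained network, and `measure()` is an invented function standing in for a real experiment (lower values are better).

```python
def fit_quadratic(xs, ys):
    """Least-squares fit y ~ a + b*x + c*x^2 via the 3x3 normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]  # augmented matrix
    for col in range(3):  # Gaussian elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    coef = [0.0] * 3
    for i in (2, 1, 0):  # back substitution
        coef[i] = (m[i][3] - sum(m[i][j] * coef[j] for j in range(i + 1, 3))) / m[i][i]
    return lambda x: coef[0] + coef[1] * x + coef[2] * x ** 2

def measure(x):
    """Hypothetical stand-in for a real experiment (lower is better)."""
    return (x - 0.73) ** 2

def adaptive_design(known, candidates, batch=4, iterations=3):
    """Fit the surrogate, measure the `batch` most promising untested candidates, repeat."""
    known = dict(known)  # candidate feature -> measured property
    for _ in range(iterations):
        model = fit_quadratic(list(known), list(known.values()))
        untested = [c for c in candidates if c not in known]
        for c in sorted(untested, key=model)[:batch]:
            known[c] = measure(c)
    return known

initial = {i / 10: measure(i / 10) for i in range(6)}  # "known" data: 0.0 .. 0.5
candidates = [i / 100 for i in range(101)]             # unexplored design space
result = adaptive_design(initial, candidates)
print(min(initial.values()), min(result.values()))
```

Each iteration adds four measurements, mirroring the batches of four alloys described above. Because the toy surrogate happens to match the invented `measure()` exactly, it finds the optimum immediately; with a real, imperfect model, purely greedy selection can stall, and adaptive-design methods often also weigh the model’s uncertainty when choosing the next experiments.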

High Throughput Screening Experimentation will be improved with Deep Learning

Research problems with a large combinatorial space of possible experiments, such as the investigation of new NiTi shape memory alloys, are likely to be expressible in an information framework suited to deep learning. Once trained, deep networks will help the investigator sort through the vast combinatorial landscape of experiment design possibilities, estimating which experiments are most likely to yield the desired property. Once the best candidate experiments are performed and the property of interest is measured, the deep network can be re-trained with the new data. Instead of starting with a total of twenty 384-well plates, for example, the researcher may need only one quarter of this amount, or may instead fill the twenty plates with more promising molecular candidates.
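The plate arithmetic in the example above works out as follows: twenty 384-well plates hold 20 × 384 = 7,680 experiments, and one quarter of that is 1,920 experiments, or 5 plates. The sketch below ranks candidates by a predicted score and keeps the most promising fraction; the scores would come from a trained network, and the candidate names and values used with it are invented for illustration.

```python
import math

WELLS_PER_PLATE = 384

def plates_needed(n_experiments, wells_per_plate=WELLS_PER_PLATE):
    """Plates required to hold n_experiments, one experiment per well."""
    return math.ceil(n_experiments / wells_per_plate)

def screen_top_fraction(predicted_scores, fraction):
    """Keep the highest-scoring fraction of candidates (higher score = more promising)."""
    ranked = sorted(predicted_scores, key=predicted_scores.get, reverse=True)
    keep = math.ceil(len(ranked) * fraction)
    return ranked[:keep]

full_screen = 20 * WELLS_PER_PLATE       # 7,680 candidate experiments
reduced = math.ceil(full_screen * 0.25)  # 1,920 after keeping the top quarter
print(full_screen, reduced, plates_needed(reduced))
```

The same ranking could instead be used to fill all twenty plates with only the highest-scoring candidates drawn from a larger pool, the second option described above.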

Deep Learning in High Energy Physics

The discovery of the Higgs Boson marked a major achievement for the Standard Model of high energy particle physics. Discovered at the CERN LHC using data collected in 2011 and 2012, the elusive particle had been hypothesized to be responsible for imparting mass to other elementary particles. Detecting the Higgs Boson with a high enough level of certainty to declare it an actual discovery required examining the products of millions of high energy proton-proton collisions for signatures of its decay. In one such decay mode, the Higgs decays into two heavy tau leptons, which then spontaneously decay into lighter leptons, such as a muon and an electron. Through the course of these spontaneous decays, tell-tale signatures can be discerned in the data, indicating that the resulting particles and momenta were very likely to have come from the decay of a Higgs Boson.

Machine learning techniques have long been used in particle physics data analysis. The application of deep networks and deep learning is an extension of machine learning methods that have previously been widely used for this sort of data analysis [Sadowski, P., et al. & Sadowski, P., et al.].

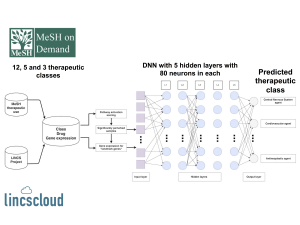

Deep Learning in Drug Discovery

Deep learning is beginning to see applications in pharmacology, in processing large amounts of genomic, transcriptomic, proteomic, and other “-omic” data [Mamoshina, P., et al.]. Recently, a deep network was trained to categorize drugs according to therapeutic use by observing the transcriptional levels present in cells after treating them with drugs for a period of time [Aliper, A., et al.] (Figure 3). Deep learning has also been used to identify blood biomarkers that are strong indicators of age [Putin, E., et al.].

Deep Learning is Just Getting Started

In addition to the applications mentioned here, there are numerous others, including robotics, autonomous vehicles (see Figure 4), genomics, bioinformatics [Alipanahi, B., et al.], and cancer screening. The 21st International Conference on Pattern Recognition (ICPR 2012) hosted a challenge for detecting breast cancer cell mitosis in histological images. In April 2016, the Massachusetts General Hospital (MGH) announced a major research effort to explore ways of improving health care and disease management by applying artificial intelligence and deep learning to a vast and growing volume of personal health data. MGH will be using the NVIDIA DGX-1 Deep Learning Appliance as the hardware platform for the research initiative.

Want to use Deep Learning?

Microway’s Sales Engineers are excited about deep learning, and we are happy to help you find the best solution for your research. Let us know what you’re working on and we’ll help you put together the right configuration.

References

1. Lena, Pietro D., Ken Nagata, and Pierre F. Baldi. “Deep spatio-temporal architectures and learning for protein structure prediction.” Advances in Neural Information Processing Systems. 2012.

2. Lee, Honglak, Roger Grosse, Rajesh Ranganath, and Andrew Y. Ng. “Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations.” In Proceedings of the 26th annual international conference on machine learning, pp. 609-616. ACM, 2009.

3. Lore, Kin Gwn, Adedotun Akintayo, and Soumik Sarkar. “LLNet: A Deep Autoencoder Approach to Natural Low-light Image Enhancement.” arXiv preprint arXiv:1511.03995 (2015).

4. Socher, Richard, et al. “Recursive deep models for semantic compositionality over a sentiment treebank.” Proceedings of the conference on empirical methods in natural language processing (EMNLP). Vol. 1631. 2013.

5. Cho, Kyunghyun, et al. “On the properties of neural machine translation: Encoder-decoder approaches.” arXiv preprint arXiv:1409.1259 (2014).

6. Song, William, and Jim Cai. “End-to-End Deep Neural Network for Automatic Speech Recognition.”

7. Sak, Haşim, Andrew Senior, and Françoise Beaufays. “Long short-term memory based recurrent neural network architectures for large vocabulary speech recognition.” arXiv preprint arXiv:1402.1128 (2014).

8. Xue, Dezhen, et al. “Accelerated search for materials with targeted properties by adaptive design.” Nature Communications 7 (2016): 11241. DOI: 10.1038/ncomms11241

9. Sadowski, Peter J., Daniel Whiteson, and Pierre Baldi. “Searching for Higgs Boson decay modes with deep learning.” Advances in Neural Information Processing Systems. 2014.

10. Sadowski, Peter, Julian Collado, Daniel Whiteson, and Pierre Baldi. “Deep Learning, Dark Knowledge, and Dark Matter.” JMLR: Workshop and Conference Proceedings 42 (2015): 81-97.

11. Mamoshina, Polina, et al. “Applications of deep learning in biomedicine.” Molecular pharmaceutics 13.5 (2016): 1445-1454.

12. Aliper, Alexander, et al. “Deep learning applied to predicting pharmacological properties of drugs and drug repurposing using transcriptomic data.” Molecular pharmaceutics (2016).

13. Putin, Evgeny, et al. “Deep biomarkers of human aging: Application of deep neural networks to biomarker development.” Aging 8.5 (2016).